Are AI and Education a Good Match? Jala University’s Perspective

By Rolando Lora, from the Research and Development team at Jala University.

Generative AI has dominated conversations for the past year, evoking reactions that range from skepticism to a mixture of optimism and pessimism. Looking back, it is evident that the world has witnessed the practical applications and far-reaching effects of Artificial Intelligence in many aspects of our lives.

Amid this technological evolution, one burning question lingers: What does AI mean for education?

At Jala University, an institution deeply committed to education and growth, we have been actively involved in working with, researching, and developing educational strategies that leverage this technology. As a result, we have formed our own opinions on the matter. We are confident that it has profound potential to reshape both software development and education. In this blog post, we will delve into the most important aspects of Generative AI and share our insights regarding its use in education. Let’s get started!

Understanding the trend

While most users are already witnessing how applications like ChatGPT, Midjourney, and DALL-E 3 transform daily workflows with significant productivity improvements, the perspective from within the field is even more astonishing.

For those directly involved in Generative AI, the pace of development is breathtaking. New models, breakthroughs, and advancements in infrastructure tools and platforms are emerging at an unprecedented rate, with notable progress occurring almost weekly. This rapid evolution highlights not only the immediate impact on everyday tasks but also the far-reaching potential of the field in a not-so-distant future.

To illustrate the rapid pace of development in Generative AI, consider the advancements made in early December 2023. Google announced Gemini, a groundbreaking multimodal model, challenging GPT-4. Concurrently, Mistral AI unveiled Mixtral 8x7b-32k, an open-weights ‘mixture of experts’ model, through a cryptic tweet.

Together AI released StripedHyena-7B, a very competent model that deviates from the traditional transformer architecture. Furthermore, Cornell researchers introduced ‘QuIP#’, a technique for compressing 16-bit models to 2-bit representations with minimal performance loss. These developments, just a snapshot of ongoing weekly progress, highlight the field’s dynamic evolution.

Terms like “multimodal”, “transformers”, “mixture of experts”, and “quantization” might be new to some readers. To make our discussion more accessible, we will narrow down our focus to the basics. So, let’s start with a fundamental question: What is Generative AI?

Understanding generative AI

Generative AI refers to artificial intelligence systems that can generate new content.

This includes everything from writing text and composing music to generating realistic images or videos. A significant breakthrough in this field came with the advent of transformer-based architectures and deep learning techniques, which have greatly enhanced the capabilities and efficiency of these AI systems.

When the generated content is text, we use the term Large Language Models, or LLMs. For instance, GPT-4 is a large language model. So are the LLaMA models (developed by Meta), Claude-2 (developed by Anthropic), PaLM-2 (developed by Google), Mistral (developed by Mistral AI) and many others that currently compete for the top spots on chatbot arena leaderboards.

They are called large because their deep learning architecture resembles a vast network of neural connections, akin to a simplified model of the human brain. These networks, composed of billions of parameters, are trained with one apparently simple goal: predict the next token.

The training stage for a competitive LLM is expensive and very challenging. For a good introduction, you can check out Andrej Karpathy’s YouTube video “Intro to Large Language Models”.

However, at its core an LLM consists of:

- A compressed representation of the world, as derived from its training text data.

- A highly effective next token predictor.

These two capabilities make LLMs efficient knowledge retrieval tools. This is true provided the knowledge was present in their learning corpus and was neither diluted nor inconsistent.
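To make the idea of next-token prediction concrete, here is a minimal sketch in Python. It is a toy word-level bigram counter, not how a real LLM works: actual models use neural networks with billions of parameters and subword tokens. The function names (`train_bigram`, `predict_next`, `complete`) and the tiny corpus are hypothetical and chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    tokens = text.lower().split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def complete(counts, prompt, max_new_tokens=5):
    """Extend the prompt one predicted token at a time."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        next_token = predict_next(counts, tokens[-1])
        if next_token is None:
            break  # nothing in the training text followed this token
        tokens.append(next_token)
    return " ".join(tokens)


# Hypothetical toy corpus; the count table is a (very lossy) compressed view of it.
corpus = (
    "ok computer was released in 1997 . "
    "ok computer was recorded in england . "
    "ok computer is an album ."
)
model = train_bigram(corpus)
print(complete(model, "ok", max_new_tokens=2))
```

Even at this scale, the sketch shows both points from the list above: the count table is a compressed summary of the training text, and the predictor can only return what that text contained. A word that never appeared in the corpus yields no prediction at all.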

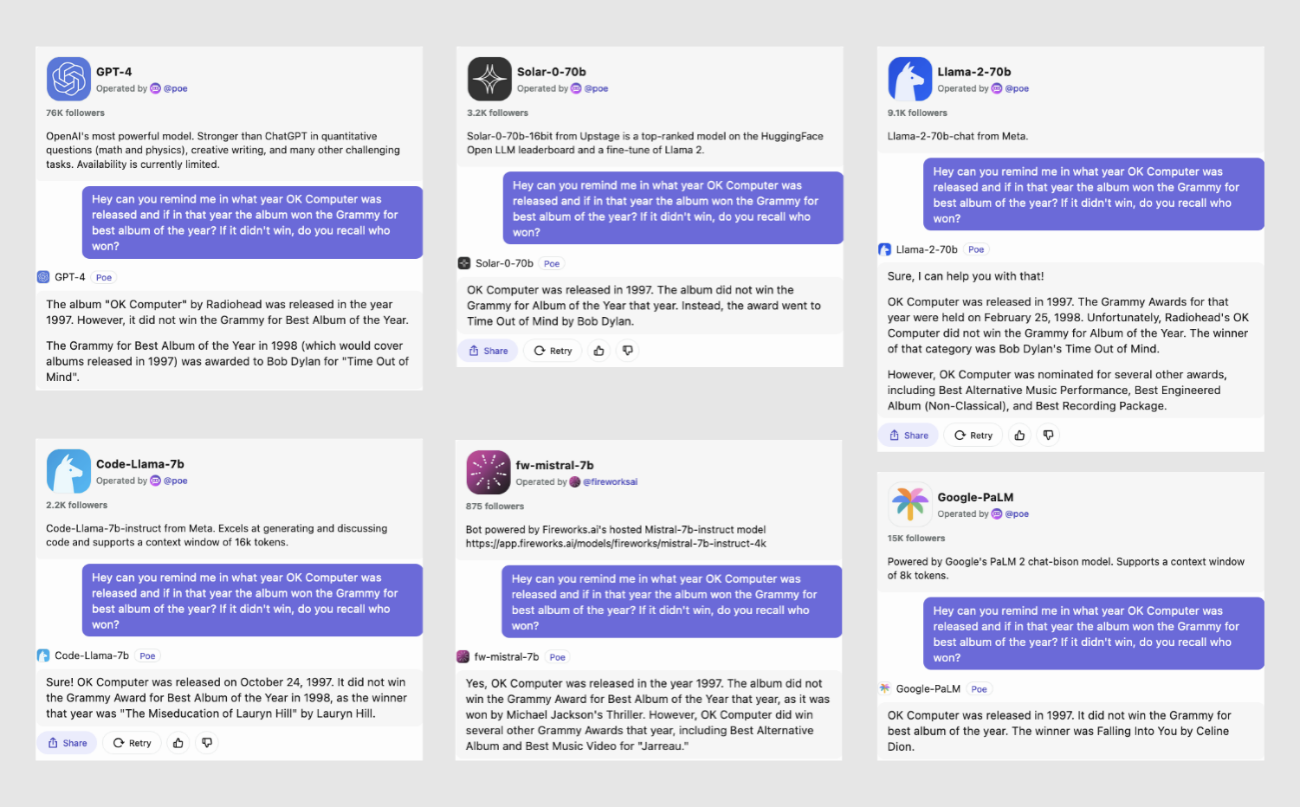

When we evaluated the performance of various AI models by asking them the question “When was the release year of Radiohead’s ‘OK Computer’, and the Grammy Award for that year?”, we got some interesting results:

Most models correctly identify 1997 as the release year. The top three models (GPT-4, Solar, and Llama-2-70B) also accurately note that Bob Dylan won the Grammy for Album of the Year for that year. However, apart from GPT-4, most models introduce inaccuracies or mix up facts when providing additional historical information, illustrating the challenges in achieving perfect factual consistency in AI responses.

The second capability that we can observe is that models are sometimes dreamers!

Since they are trained essentially to predict the next token and they hold a compressed version of the world, they will generate plausible-looking completions for a prompt, whether or not those completions are factually accurate. On the positive side, this trait underscores the imaginative aspect of AI.

It turns out that some of these models, in particular GPT-4, but also models like Mistral, Starcoder, Replit, Phind, CodeLlama and others, are very good at coding. And by very good, we mean they can not only understand and debug complex code but also write functional, efficient programs across various languages.

They demonstrate an ability to grasp programming concepts, follow logical structures, and even offer creative coding solutions that can rival experienced human programmers.

These types of AI have therefore become very handy tools in our everyday lives. But what does this mean for the future of education? The challenge presented here is not only to discern whether something has been done by students or by AI, but also to consider whether it is necessary to learn these skills in today’s world.

Jala’s take on generative AI

From our position at Jala, with more than two decades of experience in software development and education, we recognize the critical importance of understanding Generative AI’s impact.

Jala University, a cornerstone in our vision, represents our commitment to those trusting us with their education and to our region. In this light, understanding how Generative AI will reshape both the software industry and the educational landscape becomes not just relevant, but essential for us.

Over the past year (2023) we saw glimpses of the enormous potential that this technology brings to the software development process. Our software engineers learned to embrace the tools and use them responsibly to maximize their productivity. We also experienced many pains and witnessed the frustration of trainers and students when the technology seemed to be turning against us.

So, after a lengthy introduction, and without trying to be too speculative on what the future might bring, we want to share key insights that encapsulate our position on Generative AI.

1. Generative AI is here to stay; educators and students must embrace it proactively.

It is important to expose our students to the technology as early as possible (ideally during the first semester).

2. We (the University) must slightly rebalance our student outcomes towards human-centric (people) skills.

Over the past 20 years, our educational journey has been marked by a shift away from traditional models focused on memorization and repetition, towards nurturing critical thinking and problem-solving abilities.

As we walk (or run) towards a world where Generative AI complements our abilities on a daily basis, knowledge-based skills will be less relevant, and human-centric skills will be significantly more important.

We’re already well aligned with this path, but we must redouble our efforts so that our students can maximize their skills in critical thinking, collaboration, communication, resilience, problem solving, creativity, emotional intelligence and the pursuit of lifelong learning.

Paradoxically, Generative AI can either help us in this effort or work against us.

3. Leverage Generative AI tools towards the accomplishment of our student outcomes.

Concretely:

a. Explore and implement personalized learning using AI Tutors that can complement the learning experience in our courses while adapting to each student’s strengths, weaknesses, interests, and learning styles.

b. Explore and implement highly efficient real-time language translation support, reducing the communication gap that we sometimes encounter among native speakers of English, Spanish and Portuguese.

c. Explore and implement automated AI feedback systems.

d. Ensure that the technology is accessible and available to students and educators.

4. Continuous Reevaluation and Adaptation.

Regularly revisit and reassess our educational strategies to ensure they remain aligned with the latest developments in Generative AI. This involves adapting our curriculum, teaching methodologies, and tools to stay at the forefront of AI-driven educational practices.

Conclusion

Our commitment to education and to our region is stronger than ever. Our journey with Generative AI is just beginning, and we anticipate further integrating these technologies to enhance learning and research. Together, we step into a new era of education at Jala University, ready to innovate and lead in an AI-augmented world.

This article was enhanced with insights and proofreading assistance from frontier AI models, including OpenAI’s GPT-4 and Anthropic’s Claude-2 100K.